User-agent analysis is a powerful method in threat hunting for identifying unusual behaviors that may indicate malicious activity. Each connection tells a story, from the mundane to the suspicious.

In this post, I'll share how we can use user-agent analysis to spot the needles in the haystack. We'll go from basic statistical techniques to a real-world case where an unusual user-agent pattern helped me find remote access software that was unauthorized in our environment. While much of threat hunting targets attackers who try to hide from fancy detection tools, user-agent analysis can also reveal activity hiding in plain sight.

TL;DR:

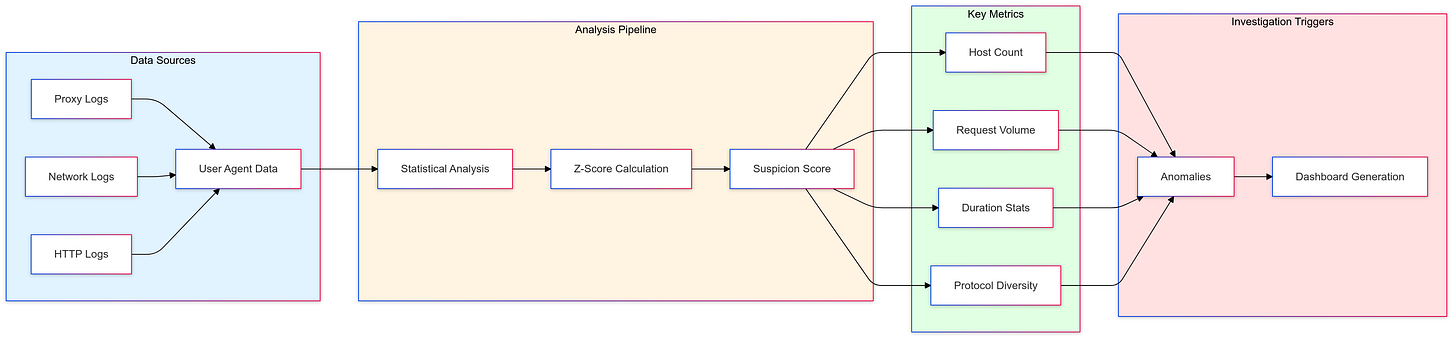

What: An attempt to find suspicious activity through user-agent analysis in proxy/network logs

Why: User-agents can help us identify unauthorized tools and suspicious behavior

How: Using statistical analysis (Z-scores) and a custom suspicion score to identify anomalies

Win: Found unauthorized remote access software (RemotePC) used by a compromised host via a WebSocket user-agent pattern

Takeaways: Includes SQL queries, a user-agent investigation decision tree, and common false positive examples

Why it matters to us:

Finding Anomalies: Rare or uncommon user-agents, such as those associated with remote monitoring and management (RMM) or automation tools, might help identify unauthorized or compromised users/hosts.

Identifying Patterns: Statistical analysis of user-agents helps detect deviations from normal behavior in our environment. Metrics like suspicion scores (which we'll discuss in more detail later), unique hosts, total requests, and Z-scores quantify anomalies. For example, an unauthorized remote management tool's user-agent with a low or bursty "active_days_z" score that is observed from only a single user/host could indicate suspicious activity.

Behavioral Indicators: Combining user-agent data with metrics like total requests and unique hosts provides a more complete view of user behavior. A high "unique_hosts" count for a remote access user-agent might suggest "normal" usage in our environment. In contrast, a low "unique_hosts" count could indicate a one-off occurrence or unauthorized use.

Let’s play with some Stats:

Gathering data:

We collect proxy or network data with user-agent information in our SIEM. This data typically includes username, IP, protocol, category, HTTP method, duration, bytes in and out, and other relevant details. Most proxy services, such as Forcepoint Websense and Imperva, provide this data. If a data source only has user-agent and IP information, we can cross-correlate it with other sources to obtain user and host details. For simplicity, we can also use the IP address itself as the "entity" we count.

The initial query to gather user agents would be something like:

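```sql
WITH unnested_logs AS (
  SELECT
    dst_host,
    user_name,
    event_time,
    duration,
    http_method,
    protocol,
    ua
  -- UNNEST is needed because user_agent is an array field in my dataset;
  -- drop it (and select user_agent AS ua) if yours is a plain string column
  FROM logs, UNNEST(user_agent) AS ua
),
```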

To better organize and process the data, we use Common Table Expressions (CTEs) in SQL. We then use the output of the CTE above to build user-agent-specific metrics such as unique hosts and usernames, along with averages and standard deviations.

```sql
user_agent_stats AS (
  -- One row per user agent with volume, spread, and timing metrics
  SELECT
    ua,
    COUNT(DISTINCT dst_host) AS unique_hosts,
    COUNT(DISTINCT user_name) AS unique_users,
    COUNT(*) AS total_requests,
    COUNT(DISTINCT DATE(event_time)) AS active_days,
    AVG(duration) AS avg_duration,
    STDDEV(duration) AS stddev_duration,
    COUNT(DISTINCT http_method) AS num_http_methods,
    COUNT(DISTINCT protocol) AS num_protocols,
    MIN(event_time) AS first_seen,
    MAX(event_time) AS last_seen
  FROM unnested_logs
  GROUP BY ua
),
```

Next, we calculate Z-scores, which measure how unusual each user-agent's behavior is compared to the overall environment. A Z-score tells us how many standard deviations a particular value is from the average.

Z-score = (observation - mean) / standard deviation

Typical thresholds:

- |Z| > 2: Unusual

- |Z| > 3: Very unusual

- |Z| > 4: Extremely unusual

For each metric in our set (hosts, users, requests, active days, duration), we:

- Subtract the global average (e.g., ua.unique_hosts - gs.avg_unique_hosts)

- Divide by the global standard deviation (e.g., gs.stddev_unique_hosts)

- Use NULLIF() to prevent division-by-zero errors

```sql
-- ua: a row of user_agent_stats; gs: a one-row CTE (not shown) holding the
-- average and standard deviation of each metric across all user agents
(ua.unique_hosts - gs.avg_unique_hosts) / NULLIF(gs.stddev_unique_hosts, 0) AS unique_hosts_z,
(ua.unique_users - gs.avg_unique_users) / NULLIF(gs.stddev_unique_users, 0) AS unique_users_z,
(ua.total_requests - gs.avg_total_requests) / NULLIF(gs.stddev_total_requests, 0) AS total_requests_z,
(ua.active_days - gs.avg_active_days) / NULLIF(gs.stddev_active_days, 0) AS active_days_z,
(ua.avg_duration - gs.global_avg_duration) / NULLIF(gs.stddev_avg_duration, 0) AS avg_duration_z,
LENGTH(ua.ua) AS ua_length,
(ua.total_requests / NULLIF(ua.unique_users, 0)) AS requests_per_user,
(ua.unique_hosts / NULLIF(ua.unique_users, 0)) AS hosts_per_user
```
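To sanity-check the Z-score logic outside the SIEM, here is a minimal Python sketch (the function names are my own, not from any library). It uses the sample standard deviation, which is what STDDEV returns in BigQuery and PostgreSQL (MySQL's STDDEV is the population version), and returns None where the SQL NULLIF(..., 0) would produce NULL. The active_days values are hypothetical.

```python
from statistics import mean, stdev


def z_scores(values):
    """Return (value - mean) / sample standard deviation for each value.

    Returns None for every value when the standard deviation is 0,
    mirroring NULLIF(stddev, 0) in the SQL above.
    """
    mu = mean(values)
    sigma = stdev(values)  # sample stddev (n - 1 denominator)
    if sigma == 0:
        return [None] * len(values)
    return [(v - mu) / sigma for v in values]


def z_label(z):
    """Map a z-score onto the rough thresholds listed earlier."""
    if z is None:
        return "n/a"
    magnitude = abs(z)
    if magnitude > 4:
        return "extremely unusual"
    if magnitude > 3:
        return "very unusual"
    if magnitude > 2:
        return "unusual"
    return "normal"


# Hypothetical active_days values for eight user agents
active_days = [2, 4, 4, 4, 5, 5, 7, 9]
for days, z in zip(active_days, z_scores(active_days)):
    print(days, round(z, 2), z_label(z))
```

One caveat when reading the thresholds: with the sample standard deviation, no value among n observations can have |Z| larger than (n - 1)/√n. With only eight user agents that ceiling is about 2.47, so |Z| > 3 is impossible; the thresholds only become meaningful once you score a reasonably large population of user agents.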

Understanding suspicion_score

In our example, we created a 'suspicion_score' to analyze user-agent strings and identify potentially anomalous activity (this is just a sample; interpret and customize it based on your environment and expertise). It assigns each user agent a score between 0 and 1 by weighting multiple attributes. For example, we gave 15% weight each to unusual numbers of hosts, users, and requests and to suspicious user-agent string lengths, 10% each to activity patterns (active days, average duration, and number of HTTP methods), and 5% each to protocol diversity and the user-agent category. These weights are subjective and can be tuned based on environmental knowledge or the goal of the hunt. The overall score also helps us prioritize investigations and focus on the most anomalous behaviors first.

```sql
-- ua_category comes from a categorization step not shown in this post.
-- Dividing by 10.0 / 5.0 avoids integer division in dialects where INT / INT truncates.
(
  0.15 * (1 - 1 / (1 + EXP(-ABS(unique_hosts_z) / 10))) +
  0.15 * (1 - 1 / (1 + EXP(-ABS(unique_users_z) / 10))) +
  0.15 * (1 - 1 / (1 + EXP(-ABS(total_requests_z) / 10))) +
  0.10 * (1 - 1 / (1 + EXP(-ABS(active_days_z) / 5))) +
  0.10 * (1 - 1 / (1 + EXP(-ABS(avg_duration_z) / 5))) +
  0.10 * LEAST(num_http_methods / 10.0, 1) +
  0.05 * LEAST(num_protocols / 5.0, 1) +
  0.15 * CASE WHEN ua_length < 20 OR ua_length > 500 THEN 1 ELSE 0 END +
  0.05 * CASE WHEN ua_category = 'other' THEN 1 ELSE 0 END
) AS suspicion_score
```

The suspicion_score combines three kinds of attributes:

Statistical anomalies: We use Z-scores for unique hosts, users, total requests, active days, and average duration. These help identify outliers in our environment.

HTTP methods and protocols: We consider the variety of methods and protocols used by each user agent.

User-agent characteristics: We examine the length of the user-agent string and its category, flagging unusually short or long strings and those categorized as "other." This heuristic is fairly weak, though: it can miss cases where an attacker or insider uses a slight variation of a "normal" user-agent. It is good enough for our example.

Why did we do what we did?

https://www.datawrapper.de/_/dmTsd/?v=2

An example: Unusual WebSocket Activity

Since threat hunting starts with a hypothesis, mine is based on the APEX framework (A - Analyze Environment, P - Profile Threats, E - Explore Anomalies, X - X-factor Consideration).

[A] Based on our environment's baseline of approved remote management tools and their typical patterns, [P] we hypothesize unauthorized remote access tools are being used for malicious purposes. [E] We expect to find anomalous user agent strings with unusual activity patterns like:

Rare or unique user agents on few or single hosts/users

Inconsistent version patterns across the environment

RMM tool usage

[X] Additional factors include correlation with suspicious domains, unexpected protocol usage, and deviations from known tool signatures.

One user agent (UA) stood out: websocket-sharp/1.0/net45, because of its behavior and the sudden spikes in activity visible in the metrics.

- active_days: 14

- active_days_z: 6.065310525407249

- avg_duration: 6.457142857142856

- avg_duration_z: -0.5618276785969151

- first_seen: 2024-10-01T23:11:21Z

- hosts_per_user: 1

- last_seen: 2024-10-29T20:17:13Z

- num_http_methods: 1

- num_protocols: 1

- requests_per_user: 35

- suspicion_score: 0.514988557364753

- total_requests: 35

- total_requests_z: -0.00699213278186984

- ua_category: other

- ua_length: 19

- unique_hosts: 1

- unique_hosts_z: -0.008524453434536202

- unique_users: 1

- unique_users_z: -0.016810278172976426

- user_agent: websocket-sharp/1.0

This WebSocket client library seems interesting to me because it's not typical in our environment and it only appeared for one user for a short activity period.

Analysis:

Finding anomaly:

The presence of websocket-sharp/1.0/net45 in our traffic logs suggests either a custom application built on this library or something worth investigating.

Gathering statistics:

Active on 14 distinct days between first_seen (2024-10-01T23:11:21Z) and last_seen (2024-10-29T20:17:13Z), with an active_days_z score of 6.065

35 total requests from a single user and host

Used only one HTTP method and one protocol

Suspicion score of 0.515, which ranks as anomalous in our environment

All in all, we can see a link between a single source and several patterns that "deviate" from "normal". The elevated suspicion score and abnormal active_days_z score helped us pick this user agent to investigate first, ahead of everything else in our dataset.

Connections: Investigating the unusual WebSocket user agent took an unexpected turn. At first I thought its connections might be suspicious, but the analysis revealed two really interesting ones.

I found a WebSocket connection that looked a bit suspicious: wss://capi.grammarly.com/fpws?accesstoken. I was getting excited about finding a suspicious WebSocket connection, and it turned out to be someone's grammar checker. It was a reminder that not every unusual connection is malicious.

This pattern appears to be common across multiple vendors, such as mdds-i.fidelity.com and uswest2.calabriocloud.com/api/websocket/sdc/agent, seen as HTTP/S connections.

Digging further, I found connections to wss://est-broker39.remotepc.com/proxy/remotehosts?, a domain associated with RemotePC, a remote access service, from a single username on a single host.

What we found:

The user was infected via a downloader masquerading under the name of an RMM tool (additional analysis confirmed this)

Unauthorized use of a legitimate service

Here are some other findings from the same dataset using the same method:

Several outdated versions of multiple RMM tools that are legitimate in the environment

Usage of other RMM tools that are not approved

Additional Improvements to Experiment With

User-Agent Length

Try dynamic thresholds based on historical data

Create UA length profiles per application category (browsers vs. API clients vs. mobile apps)

Track sudden changes in UA length patterns over time
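For the dynamic, per-category length thresholds above, here is a minimal Python sketch. It assumes you can export historical (category, ua_length) pairs; the category labels ("browser", "api_client") and the 1st/99th percentile defaults are hypothetical choices for illustration, not values from our environment.

```python
from collections import defaultdict
from statistics import quantiles


def length_bounds(lengths, low_pct=1, high_pct=99):
    """Return (low, high) percentile cut-offs for a list of UA lengths."""
    cuts = quantiles(lengths, n=100, method="inclusive")  # 99 cut points
    return cuts[low_pct - 1], cuts[high_pct - 1]


def build_profiles(history):
    """history: iterable of (category, ua_length) pairs from past data.

    Categories with fewer than two samples are skipped; a percentile
    band built from a single value is not meaningful.
    """
    by_category = defaultdict(list)
    for category, length in history:
        by_category[category].append(length)
    return {
        category: length_bounds(lengths)
        for category, lengths in by_category.items()
        if len(lengths) >= 2
    }


def is_length_outlier(category, length, profiles):
    """Flag lengths outside the category's historical band.

    Categories without a profile are flagged too, since they are new to us.
    """
    if category not in profiles:
        return True
    low, high = profiles[category]
    return length < low or length > high


# Hypothetical history: browser UAs between 100 and 200 characters long
profiles = build_profiles([("browser", n) for n in range(100, 201)])
print(is_length_outlier("browser", 19, profiles))  # True
```

Rebuilding the profiles on a schedule (for example, from the last 30 days) is one way to make the thresholds track the environment instead of the fixed 20/500 cut-offs in the score.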

More Stats

Try percentile-based thresholds

Try Median Absolute Deviation (MAD) to find better outliers
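For the MAD idea, a minimal Python sketch of the modified Z-score (Iglewicz and Hoaglin's 0.6745 × (x - median) / MAD, with |modified z| > 3.5 as a common cut-off) is below. The total_requests values are hypothetical.

```python
from statistics import median


def modified_z_scores(values):
    """Robust z-scores based on the median absolute deviation (MAD).

    Uses 0.6745 * (x - median) / MAD; a common rule of thumb flags
    |modified z| > 3.5. Returns None for every value when MAD is 0,
    which happens when most values are identical.
    """
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        return [None] * len(values)
    return [0.6745 * (v - med) / mad for v in values]


# Hypothetical total_requests per user agent, with one heavy hitter
requests = [1, 1, 1, 1, 2, 2, 2, 3, 50]
print([round(z, 2) for z in modified_z_scores(requests)])
```

On this list, the classic Z-score of 50 is only about 2.66 because the outlier itself inflates the standard deviation, while its modified Z-score is about 32.4. On heavily skewed metrics such as unique_hosts, where most user agents may sit on a single host, MAD can be 0; that case returns None here and needs a fallback.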

User-Agent Categorization

Try different categorization

Track categories over time

Create allow/deny lists per category based on environmental context

See if you can implement behavioral profiling per category

Suspicion Score

Experiment with different suspicion scores (Z-score-based, volume-based, behavior-based) and weights

The current implementation breaks if duration or any other input is missing (in SQL, a single NULL term makes the whole sum NULL), so it's worth experimenting with a version that still produces a score when some inputs are null.
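One way to keep the score usable when inputs such as duration are null is to skip the missing terms and rescale the remaining weights so they sum to 1. Below is a minimal Python sketch of that idea; the function name and example values are hypothetical. In SQL, a similar effect can be approximated by wrapping each term in COALESCE(term, 0) and dividing by the sum of CASE WHEN input IS NOT NULL THEN weight ELSE 0 END.

```python
def renormalized_score(terms):
    """Weighted score that tolerates missing inputs.

    `terms` is a list of (weight, component) pairs, where component is a
    0-1 value or None when its input (e.g. duration) is unavailable.
    Missing components are skipped and the remaining weights are rescaled
    to sum to 1, so the result stays on the 0-1 scale. Returns None when
    every component is missing.
    """
    present = [(w, c) for w, c in terms if c is not None]
    total_weight = sum(w for w, _ in present)
    if total_weight == 0:
        return None
    return sum(w * c for w, c in present) / total_weight


# Hypothetical example: duration is missing, so its 0.10 weight is dropped
terms = [
    (0.15, 0.5),   # unique_hosts term
    (0.10, None),  # avg_duration term (no duration in this log source)
    (0.15, 1.0),   # ua_length flag
    (0.05, 1.0),   # ua_category flag
]
print(renormalized_score(terms))
```

The trade-off is that a user agent scored on fewer inputs is compared on a different basis than one with full data, so recording the weight actually used next to the score can help when ranking results.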

Volume-based patterns:

Sudden spikes

Regular patterns that could indicate automation

Behavioral indicators:

Add enrichments such as geolocation for domains and IPs, ASN, etc.

Protocol usage
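For the volume-based patterns listed above (sudden spikes and regular patterns that could indicate automation), a rough Python starting point is sketched below. It assumes you can pull raw request timestamps per user agent; the 5x-median spike factor and the use of the coefficient of variation of inter-request gaps are my own illustrative choices, not part of the scoring above.

```python
from collections import Counter
from datetime import datetime, timedelta
from statistics import mean, median, pstdev


def interval_regularity(timestamps):
    """Coefficient of variation (stdev / mean) of the gaps between requests.

    Values near 0 mean evenly spaced requests, which can point to automation
    or beaconing. Returns None with fewer than three timestamps or when all
    requests share one timestamp.
    """
    ts = sorted(timestamps)
    gaps = [(later - earlier).total_seconds() for earlier, later in zip(ts, ts[1:])]
    if len(gaps) < 2 or mean(gaps) == 0:
        return None
    return pstdev(gaps) / mean(gaps)


def spike_days(timestamps, factor=5):
    """Days whose request count exceeds `factor` times the median daily count.

    Only days with at least one request count toward the median baseline.
    """
    daily = Counter(t.date() for t in timestamps)
    if not daily:
        return []
    baseline = median(daily.values())
    return sorted(day for day, count in daily.items() if count > factor * baseline)


# Hypothetical example: one request every 5 minutes looks machine-driven
start = datetime(2024, 10, 1)
beacon = [start + timedelta(minutes=5 * i) for i in range(12)]
print(interval_regularity(beacon))
```

Legitimate agents (update checkers, the Grammarly-style WebSocket clients mentioned earlier) can also be very regular, so a low value is a prompt to look closer rather than a verdict.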

Common User-Agent Success and False Positive Criteria

Here's a lesson I learned the hard way in threat hunting: define your success criteria upfront. Before you begin any hunt, establish clear criteria for, first, what specifically confirms a threat and, second, what might look suspicious but turn out to be normal business activity. This saves time, helps document and cross-check legitimate business activity, and keeps the investigation focused on real leads. These criteria are based on our hypothesis; anything else you find should go into the threat hunt pipeline and/or be marked for future investigation.

Success Criteria:

Unauthorized RMM tool confirmed

Abnormal usage patterns documented

Host/user attribution established

Tool installation timeline determined

False Positive Criteria:

Legitimate business tool

Approved user/purpose

Normal usage pattern

Documentation exists

Examples of false positives or activity that needs to be documented:

https://www.datawrapper.de/_/W6weg/

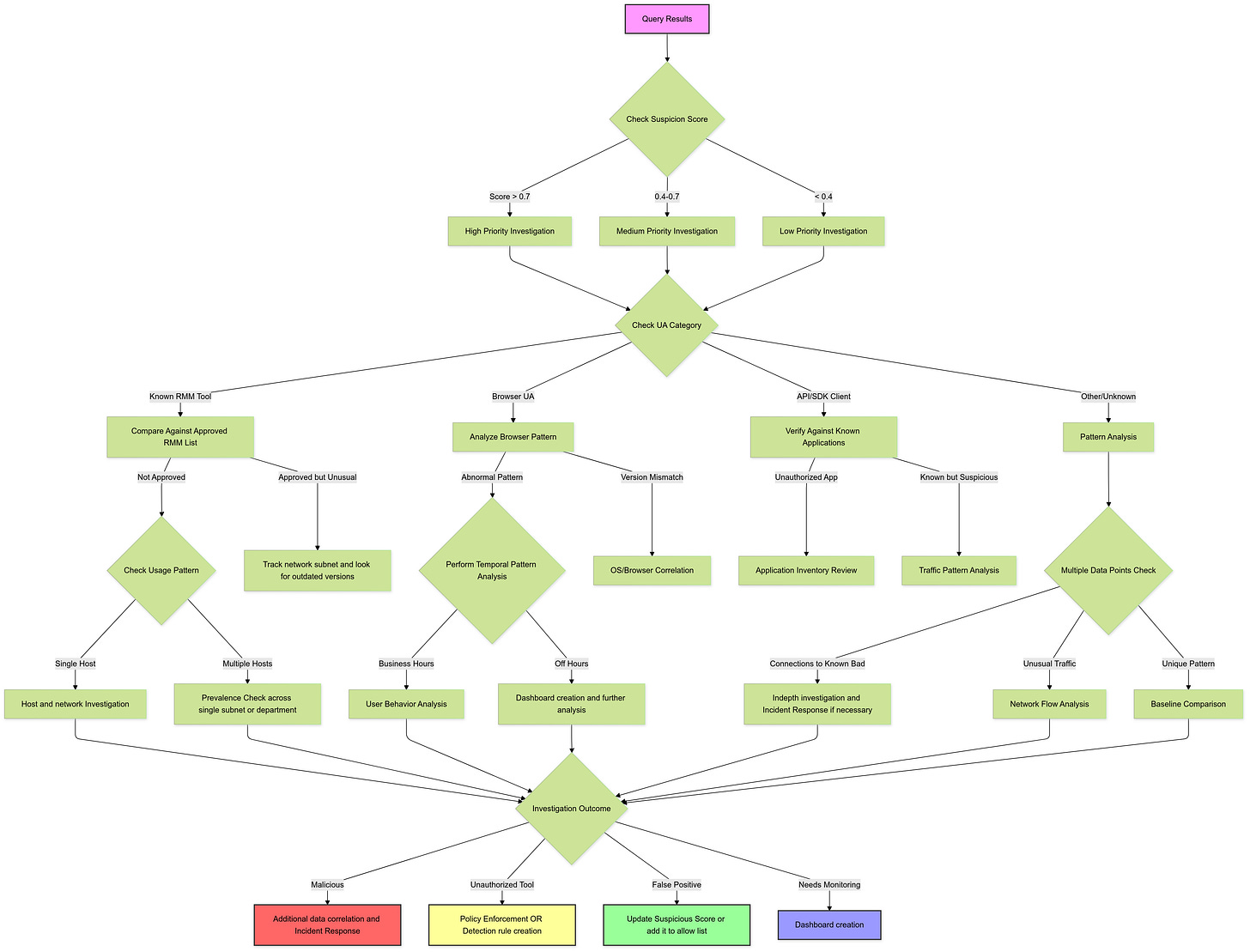

User Agent Analysis Decision Tree

When we spot suspicious user-agents, a decision tree helps us figure out where to start investigating. Start with any user-agent that caught your attention (either because it has a high suspicion score or because it looks odd). Follow the path based on what you find, and write down everything you do at each step. For instance, when we saw “websocket-sharp/1.0/net45”, we first checked whether it was already on our list (it wasn't). Then we compared it against known software (it was a RemotePC connection), checked how often it was used (it appeared on only one host, with a lot of activity), and moved on to “Further Investigation”. That's when we found out that someone was using unauthorized RMM software. Remember, this tree is just a way for me to organize my thoughts, not a strict rulebook. Over time, your environment and goals will guide how you approach each decision point.
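The walk-through above can be encoded as a small triage helper so each decision is recorded consistently. This is a hypothetical Python sketch: the branch order follows the websocket-sharp example (known list, then known software, then prevalence, then further investigation), but the data class, thresholds, return strings, and the "ExampleApprovedRMM" name are my own placeholders.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class UAFinding:
    user_agent: str
    suspicion_score: float
    unique_hosts: int
    matched_software: Optional[str] = None  # result of your own software lookup


def triage(finding, allowlist, approved_software,
           score_threshold=0.5, rare_host_limit=2):
    """Return the next step for a user agent, following the tree's order:
    known list -> known software -> prevalence -> further investigation.
    Thresholds are placeholders to tune for your environment.
    """
    if finding.user_agent in allowlist:
        return "already known: document and close"
    if finding.matched_software in approved_software:
        return "approved software: compare version and usage to baseline"
    if (finding.unique_hosts <= rare_host_limit
            or finding.suspicion_score >= score_threshold):
        return "further investigation"
    return "monitor"


# The websocket-sharp case: not allowlisted, RemotePC is not approved,
# one host, suspicion score 0.515
websocket_finding = UAFinding("websocket-sharp/1.0/net45", 0.515, 1, "RemotePC")
print(triage(websocket_finding, allowlist=set(),
             approved_software={"ExampleApprovedRMM"}))
```

The "approved software" branch reflects the other findings from this hunt (outdated versions of legitimate RMM tools): an approved tool still deserves a version and usage check rather than an automatic close.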

These findings highlight the value of user-agent analysis in threat hunting. Discovering both benign (Grammarly) and potentially concerning (RemotePC) connections shows why we need to remember that anomalies are just that: anomalies. Manual investigation, further research, and correlation with other data sources are required to figure out why an anomaly exists and what it means in our environment. This helps us find more interesting results and data for further analysis. Hopefully, this post showed how user-agent analysis and statistical anomaly detection complement each other to uncover hidden activity by linking unusual patterns, and offered some ideas on how to approach user-agent analysis.