Recently, Fortinet released an advisory stating that two CVEs (CVE-2024-55591 and CVE-2022-40684) were being actively exploited in the wild. I started looking at it from a threat hunting perspective, but since the advisory offers few details for mapping out the overall picture, I turned to adversary infrastructure analysis in the hope of finding connections that would expand the set of observables and patterns. Starting from the IOCs in the Fortinet advisory, the analysis expanded to a correlation with Arctic Wolf’s “Console Chaos” research and to other pivots with potential connections to the Core Werewolf (also known as PseudoGamaredon or Awaken Likho) threat actor group.

Fortinet Advisory

I spilled my coffee a bit when I first saw the Fortinet advisory listing the usual suspects: loopback addresses and Google DNS. Re-reading the advisory and looking at these values in context, I understood why they were mentioned. So, while acknowledging the context in which they can matter, I set their literal “values” aside and focused on the other IOCs in the report.
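To make “acknowledge the context, ignore the values” repeatable, here is a minimal Python sketch that separates context-only addresses (loopback, private ranges, and well-known public resolvers) from IOCs worth pivoting on. The resolver set is an assumption (only Google’s 8.8.8.8 and 8.8.4.4); extend it for your own environment.

```python
import ipaddress

# Assumption: well-known public DNS resolvers treated as context-only values.
PUBLIC_RESOLVERS = {ipaddress.ip_address("8.8.8.8"), ipaddress.ip_address("8.8.4.4")}


def split_iocs(values):
    """Split raw IP strings into (pivotable, context_only) lists."""
    pivotable, context_only = [], []
    for raw in values:
        ip = ipaddress.ip_address(raw.strip())
        # Loopback, private, and public-resolver addresses describe how the
        # activity appears in logs, not adversary-controlled infrastructure.
        if ip.is_loopback or ip.is_private or ip in PUBLIC_RESOLVERS:
            context_only.append(str(ip))
        else:
            pivotable.append(str(ip))
    return pivotable, context_only
```

For example, `split_iocs(["127.0.0.1", "8.8.8.8", "45.55.158.47"])` keeps only 45.55.158.47 as a pivot candidate.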


Understanding Infra Analysis

Infrastructure analysis is not strictly part of threat hunting, but it aids threat hunting research, so its scope differs from that of, say, a threat intel or cybercrime analyst. It examines the systems, services, and networks that adversaries may use to conduct their operations, and helps us understand:

- Tools and techniques adversaries use within a cluster or campaign

- Operational patterns and preferences

- Potential connections to known threat actor groups, clusters, or campaigns

In this article, I will focus on several “breadcrumbs” of infra analysis we can follow:

1. Service Fingerprinting: Examining unique identifiers of services running on different ports

2. Historical DNS Resolution: Understanding domain name patterns and relationships

3. SSL Certificate Analysis: Identifying relationships through shared certificates

4. Network Patterns: Looking for patterns in how services are deployed

5. ASN Patterns: Understanding the adversary’s preferred hosting providers and regions
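Most of these breadcrumbs reduce to the same operation: finding hosts that share an attribute value (a certificate fingerprint, an SSH host key, a banner hash, an ASN). A minimal sketch, assuming host records have already been exported into plain dictionaries; the field names are hypothetical and not any platform’s schema.

```python
from collections import defaultdict


def shared_values(hosts, field):
    """Map each value of `field` to the sorted IPs sharing it (2+ hosts only)."""
    groups = defaultdict(set)
    for host in hosts:
        value = host.get(field)
        if value:
            groups[value].add(host["ip"])
    # A value seen on a single host is not a pivot; keep only shared ones.
    return {value: sorted(ips) for value, ips in groups.items() if len(ips) > 1}
```

A shared value is only a lead: a common Let’s Encrypt issuer, for instance, will be shared by millions of unrelated hosts, so the more specific the attribute, the more useful the grouping.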

Note: Adversary infrastructure tracking is complex, with several layers both within a group and between groups. Connections made solely from network observables may therefore be inaccurate. That said, the exercise is still valuable for understanding suspicious ASNs, “commonalities” between groups, the tools we uncover, and more.

All this to say, take the “connections” here with a grain of salt, but enjoy what we uncover and learn along the way.

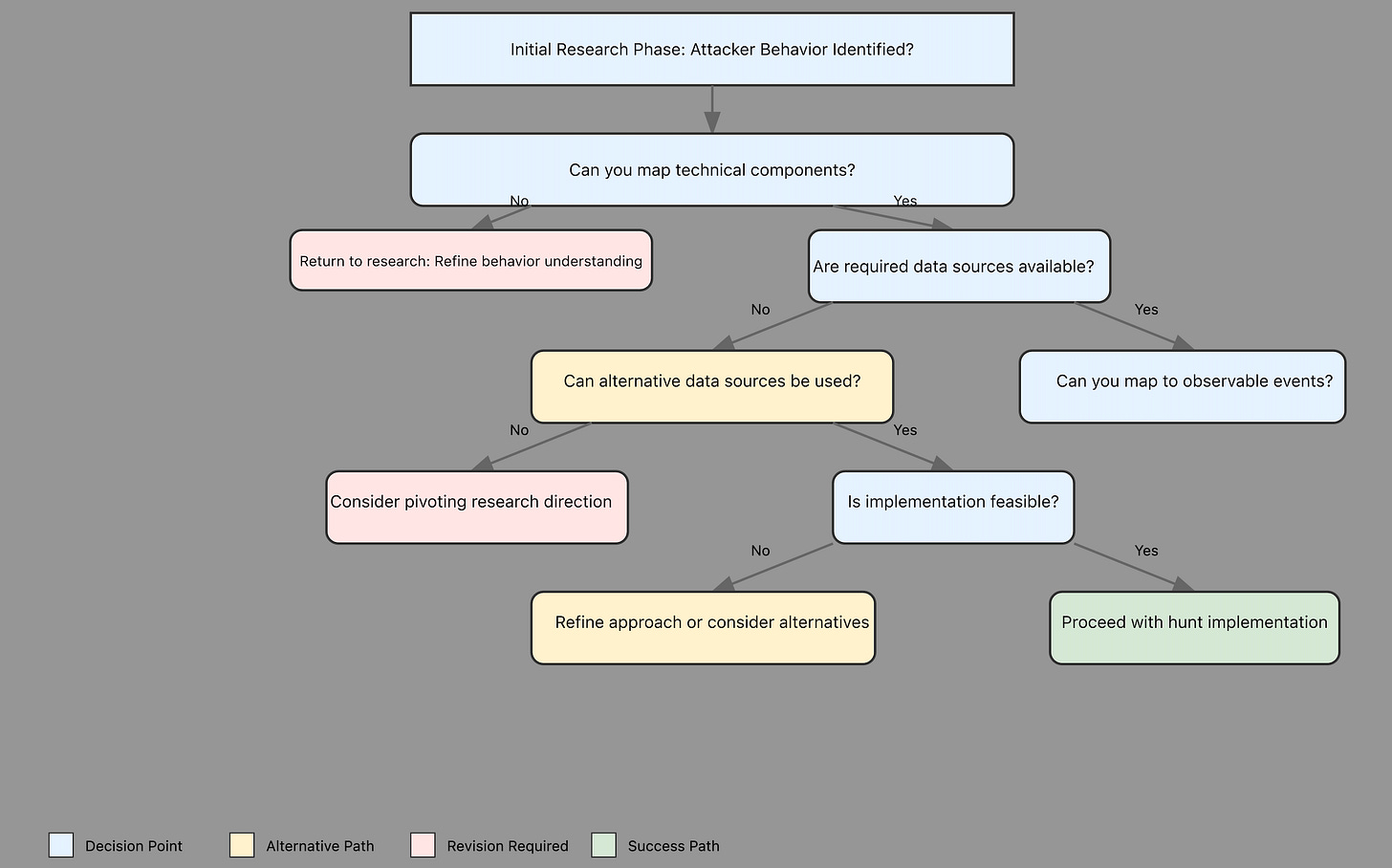

Pivoting structure

I like to have some structure so we can expand our pivots to multiple points while still validating them. Here is my approach from a threat hunting perspective:

1. First-Order Analysis: “Staying close to the source”

- I prefer direct IOCs from the primary source that kicked off the analysis (the Fortinet advisory)

- Identify ports and services and pivot from there

- Check SSL certificates to find past and future patterns

2. Correlation: for any additional references we find to the above, we should:

- Check the findings and timeframes of the research

- Validate TTPs, if available

3. Expand Infrastructure:

- Use the patterns to look for similar infrastructure (not everything found this way is high confidence unless there are solid connections)

- Check historical DNS, certificate, and ASN data from the previous two steps
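One way to keep “staying close to the source” honest is to record how many pivot hops separate each observable from the original IOCs and stop expanding past a chosen depth. The sketch assumes pivots have already been collected into an adjacency map (observable to related observables); the graph and the default limit of 2 hops are illustrative assumptions.

```python
from collections import deque


def expand_pivots(graph, seeds, max_hops=2):
    """Breadth-first expansion from seed IOCs, returning {observable: hop count}.

    Hop 0 = IOCs from the primary source (first-order analysis); higher hops
    are lower-confidence expansions and are never explored past max_hops.
    """
    hops = {seed: 0 for seed in seeds}
    queue = deque(seeds)
    while queue:
        node = queue.popleft()
        if hops[node] == max_hops:
            continue  # do not expand beyond the allowed distance
        for neighbour in graph.get(node, ()):
            if neighbour not in hops:
                hops[neighbour] = hops[node] + 1
                queue.append(neighbour)
    return hops
```

Breadth-first order guarantees each observable is labeled with its shortest distance to a seed, which is what a confidence tier based on distance needs.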

We are talking about the same thing: Arctic Wolf

When searching for IOCs from Fortinet’s advisory, I came across Arctic Wolf’s “Console Chaos” blog and saw that three IP addresses matched. One thing I always like to check is whether two reports describe the same activity by comparing their TTPs, and in this case they matched too. So, we can connect phrases we initially observed, like “exploited in the wild”, to actual findings from the intrusion set. This is not technically a pivot, but it is always good to correlate our dataset with multiple sources.
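Comparing IOC lists across reports is a set intersection, but reports often defang values differently, so normalizing first avoids missed matches. A minimal sketch; the example inputs in the test are placeholder documentation addresses (RFC 5737), not the actual Fortinet or Arctic Wolf lists.

```python
import ipaddress


def refang(value):
    """Undo common IP defanging such as 198.51.100[.]7 or 198.51.100(.)7."""
    return value.strip().replace("[.]", ".").replace("(.)", ".")


def overlapping_ips(report_a, report_b):
    """Return the IPs present in both reports, sorted numerically."""
    def normalise(values):
        return {str(ipaddress.ip_address(refang(v))) for v in values}

    return sorted(normalise(report_a) & normalise(report_b), key=ipaddress.ip_address)
```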

My failures: VPS Scanning Infra

I started digging into the overlapping IPs, but it did not reveal much: most of these IPs were on VPS providers like DigitalOcean and Datacamp, and, per the “Console Chaos” blog, they were connecting to the HTTP interface, likely for confirmation purposes.

1. 45.55.158.47 exposes the two services below; neither fingerprint provided further pivots on its own:

45.55.158.47 (DigitalOcean):

- Primary Purpose: Reconnaissance

- Service Configuration:

  - Port 22: SSH (Fingerprint: cb889df05d1536cc39938ac582e1eca1090e3662a270abff55ca6b119f2f11e0)

  - Port 3306: MySQL (Fingerprint: e77fd0556cd803fa2613509423aaa86d34dda37e19cc2cfce7145cd9b1f5ff6a)

It’s the same for 37.19.196.65, 155.133.4.175, and a few others. We would like every pivot in infrastructure analysis to yield results, but most of the time they don’t, or they turn up something irrelevant.

2. 87.249.138.47:

- Appears to be a compromised Synology device (quickconnect.to)

3. 167.71.245.10 has:

- Port 22: SSH (Fingerprint: b0ac2b8a274766825b0588520b9154995c3301cd8ae9a0020621af1529ea9179530)

- Port 3389: RDP

- Historical: emojis.soon.it (October 2024)
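Before pivoting on a copied fingerprint, it helps to confirm it is a well-formed SHA-256 value (exactly 64 hex characters), since a truncated or padded hash silently returns no results. The sketch also derives a hex SHA-256 fingerprint from an OpenSSH public key line by hashing the base64-decoded key blob; OpenSSH computes its own SHA256 fingerprint over the same blob (but prints it in base64), and the assumption here is that your scanning platform does the same and prints hex. Verify against your data source before relying on it.

```python
import base64
import hashlib
import re

SHA256_HEX = re.compile(r"[0-9a-f]{64}")


def is_sha256_hex(fingerprint):
    """True only for exactly 64 hexadecimal characters."""
    return SHA256_HEX.fullmatch(fingerprint.strip().lower()) is not None


def ssh_key_sha256_hex(pubkey_line):
    """Hex SHA-256 of the key blob in an 'ssh-ed25519 AAAA... comment' line."""
    blob = base64.b64decode(pubkey_line.split()[1])
    return hashlib.sha256(blob).hexdigest()
```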

Possible C2: Something Interesting but for the Future

Moving down the list, 157.245.3.251 has some interesting pivots, although they did not produce solid results.

- Operating System: Ubuntu Linux

- Service Layout:

  - Multiple SSH services (both standard and non-standard ports):

    - Port 22 (Standard)

    - Port 222 (Alternative)

  - HTTP services via Nginx on high ports (30000–30005)

- Historical ports (2024-09-09 and 2024-09-10): 9527 and 9530 returned empty responses, but the timeframe was still within our research range.

We can craft a query to find hosts exposing those ports with empty responses, but I did not find any. Regardless, it is an interesting pivot to re-run in the future in case the infrastructure comes back.

```
(services.port: {22, 30002, 30003, 30004, 30005}
  AND services.service_name: HTTP
  AND services.service_name: SSH
  AND autonomous_system.name: "DIGITALOCEAN-ASN"
  AND services.banner_hashes: "sha256:97f8ff592fe9b10d28423d97d4bc144383fcc421de26b9c14d08b89f0a119544"
  AND services.tls.certificates.leaf_data.issuer_dn: "C=US, O=Let's Encrypt, CN=R11")
AND services.software.vendor: "Ubuntu"
```

This query identifies infrastructure with a similar pattern:

- Multiple HTTP services on specific high ports

- SSH presence

- Specific SSL certificate patterns

- Ubuntu-based systems
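If you export candidate hosts (for example, from a broader query) and want to re-apply the same pattern offline, a small matcher keeps the criteria explicit. This is a sketch over a hypothetical, simplified host record (a dict of port to service name plus issuer and OS fields), not the Censys data model; the threshold of at least two high HTTP ports is also an assumption.

```python
from dataclasses import dataclass, field

HIGH_HTTP_PORTS = {30002, 30003, 30004, 30005}


@dataclass
class HostRecord:
    """Hypothetical, simplified host record (not a real platform schema)."""
    ip: str
    services: dict = field(default_factory=dict)  # port -> service name
    cert_issuer: str = ""
    os_vendor: str = ""


def matches_pattern(host, min_high_ports=2):
    """SSH present, HTTP on several high ports, Let's Encrypt R11, Ubuntu."""
    has_ssh = "SSH" in host.services.values()
    http_high = [port for port, name in host.services.items()
                 if name == "HTTP" and port in HIGH_HTTP_PORTS]
    return (
        has_ssh
        and len(http_high) >= min_high_ports
        and "CN=R11" in host.cert_issuer
        and host.os_vendor == "Ubuntu"
    )
```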

USPS Phishing Infra

When looking at 23.27.140.65, I found what looked like USPS phishing lures among its historical domains. This could be of interest here: it may be phishing for initial access using package-tracking themes by the group itself, or the use of an Initial Access Broker (IAB) for that purpose. It’s hard to tell from network observables alone, but nonetheless, we have 102 domains used over the past 9 months.

- Timeline: July-December 2024

- Target Focus: USPS-related services

- usps.packages-usa[.]com

- usps-do-track[.]com

- usps.packages-ui[.]com

- and many more
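To triage a long list of historical domains like this, a simple keyword heuristic separates USPS-themed lookalikes from unrelated names. The lure vocabulary is an assumption drawn from the examples above (a “usps” label or token plus package/tracking terms); real triage would also weigh registration dates and resolution history.

```python
import re

BRAND = re.compile(r"(^|[.-])usps([.-]|$)")
THEMES = ("package", "track", "delivery", "parcel")  # assumed lure vocabulary


def refang(domain):
    return domain.strip().lower().replace("[.]", ".")


def usps_lookalikes(domains):
    """Return refanged domains with a 'usps' token and a lure theme.

    The legitimate usps.com and its subdomains are excluded.
    """
    hits = []
    for raw in domains:
        d = refang(raw)
        if d == "usps.com" or d.endswith(".usps.com"):
            continue
        if BRAND.search(d) and any(theme in d for theme in THEMES):
            hits.append(d)
    return hits
```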

The Core Werewolf Connection:

My most interesting discovery so far, related to a previous campaign, came from investigating 31.192.107.165 (ORG-LVA15-AS). According to BI.ZONE, this network observable was previously linked to Core Werewolf (around October 2024), which also matches our timeframe.

- Domain: conversesuisse.net

- Server: Werkzeug/2.2.2 Python/3.11.8

- MySQL 8.0.35-0ubuntu0.22.04.1

- Redis on 6379

- SSH on 6579
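Exact version strings, such as the Werkzeug/Python server header and the MySQL package version above, make precise pivots. A minimal sketch that splits a Server header into product/version pairs, assuming the common space-separated “product/version” layout (not every server follows it).

```python
def parse_server_header(header):
    """Split 'Werkzeug/2.2.2 Python/3.11.8' into (product, version) pairs."""
    pairs = []
    for token in header.split():
        product, sep, version = token.partition("/")
        # Tokens without a slash (e.g. 'nginx') get an empty version.
        pairs.append((product, version if sep else ""))
    return pairs
```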

I spent some time looking for the patterns mentioned in the blog within the same ASN and found about 49 IP addresses serving Telegram-related content (one of the C2 channels mentioned).

Some other things that stood out relate to Sliver C2 and bulletproof hosting services.

- 141.105.64.204

  - services.ssh.server_host_key.fingerprint_sha256: f8431322831c2508255655d6d4b14802e68be656cec9189f109eb386032e99be

  - 3,524 results: not all are likely related to this infrastructure, but I found a few interesting ones related to Sliver C2, and about 2,216 are hosted on bulletproof services.
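Summarizing a large pivot result set by hosting provider is a quick way to see how much of it sits on bulletproof infrastructure. A minimal sketch, assuming results are exported as dicts with an “asn” key and that you maintain your own set of bulletproof ASNs; both the export format and the ASN set are hypothetical.

```python
from collections import Counter


def bulletproof_share(results, bulletproof_asns):
    """Return (count, fraction) of results whose ASN is in bulletproof_asns."""
    by_asn = Counter(r["asn"] for r in results)
    total = sum(by_asn.values())
    flagged = sum(n for asn, n in by_asn.items() if asn in bulletproof_asns)
    return flagged, (flagged / total if total else 0.0)
```

Applied to the counts above, 2,216 of 3,524 results works out to roughly 62.9%.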

Staying within our bounds

149.22.94.37 revealed connections to an executable on VirusTotal (VT):

- PDF-themed executables, probably delivered via SEO poisoning (PDFs Importantes.exe)

- Persistence via Registry modification for startup:

  ```
  %windir%\System32\reg.exe add "HKEY_CURRENT_USER\Software\Microsoft\Windows\CurrentVersion\Run" /v "KeybordDriver" /t REG_SZ /d "\"%APPDATA%\Windows Objects\wmimic.exe\" winstart" /f
  ```

- Malware dropped as a likely second stage:

  - C:\WINDOWS\system32\wmihostwin.exe

  - C:\WINDOWS\system32\wmiintegrator.exe

  - C:\WINDOWS\system32\wmimic.exe

  - C:\WINDOWS\system32\wmisecure.exe

  - C:\WINDOWS\system32\wmisecure64.exe

- Network enumeration with net view commands
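For hunting, the persistence command above generalizes to any reg.exe “add” that writes to a CurrentVersion\Run or RunOnce key. A minimal sketch that flags such command lines from process-creation telemetry (for example, Sysmon Event ID 1 or Windows Security Event 4688 command lines) and extracts the value name. The regular expressions are assumptions: they will not catch every quoting variant, key paths containing spaces, or other persistence routes such as PowerShell or direct registry API calls.

```python
import re

# Assumed pattern: reg(.exe) add <...\CurrentVersion\Run or RunOnce>, optionally quoted.
RUN_KEY = re.compile(
    r'\breg(?:\.exe)?\s+add\s+"?(?P<key>[^"\s]*\\CurrentVersion\\Run(?:Once)?)"?(?:\s|$)',
    re.IGNORECASE,
)
# The /v argument, quoted or bare.
VALUE_NAME = re.compile(r'/v\s+(?:"(?P<quoted>[^"]+)"|(?P<bare>\S+))', re.IGNORECASE)


def run_key_persistence(cmdline):
    """Return (key, value_name) if cmdline adds a Run/RunOnce value, else None."""
    key_match = RUN_KEY.search(cmdline)
    if not key_match:
        return None
    name_match = VALUE_NAME.search(cmdline, key_match.end())
    name = None
    if name_match:
        name = name_match.group("quoted") or name_match.group("bare")
    return key_match.group("key"), name
```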

What does this mean? Well, nothing: the creation date (2017-03-26 05:14:46 UTC) and submission date (2020-09-25 15:34:52 UTC) are far outside our research bounds, so the sample might be linked to some other cluster or campaign. Can there be a connection? Possibly. Can we make that connection now? No, because of the timeframe and the lack of other supporting evidence.
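The same bounds check can be made explicit so it is applied consistently across pivots. A minimal sketch; the research window below (2024-07-01 to 2025-01-31) is an illustrative assumption, since the actual bounds depend on the advisory and the correlated reports.

```python
from datetime import datetime, timezone

# Assumption: illustrative research window, not a value from the advisory.
WINDOW_START = datetime(2024, 7, 1, tzinfo=timezone.utc)
WINDOW_END = datetime(2025, 1, 31, 23, 59, 59, tzinfo=timezone.utc)


def parse_utc(stamp):
    """Parse 'YYYY-MM-DD HH:MM:SS UTC' into a timezone-aware datetime."""
    return datetime.strptime(stamp, "%Y-%m-%d %H:%M:%S UTC").replace(tzinfo=timezone.utc)


def in_bounds(*stamps, start=WINDOW_START, end=WINDOW_END):
    """True if any of the timestamps falls inside the research window."""
    return any(start <= parse_utc(s) <= end for s in stamps)
```

Using “any” means a sample counts as in scope if either its creation or its submission date falls in the window; switch to “all” for a stricter filter.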

Infrastructure Relationship thus far

The investigation revealed several distinct, possibly interconnected (some only very distantly) infrastructure components:

1. Scanning: for target identification and reconnaissance

2. Phishing: Likely for credential harvesting and initial access

3. C2: Infrastructure we may be able to connect in the future, or at least keep in our dataset to extract more insights such as C2 configs, ports, etc.

4. Campaign: a possible relationship with the Core Werewolf (AKA PseudoGamaredon, Awaken Likho) threat actor group during the same timeframe. This requires additional patterns and observables to strengthen our intuition.

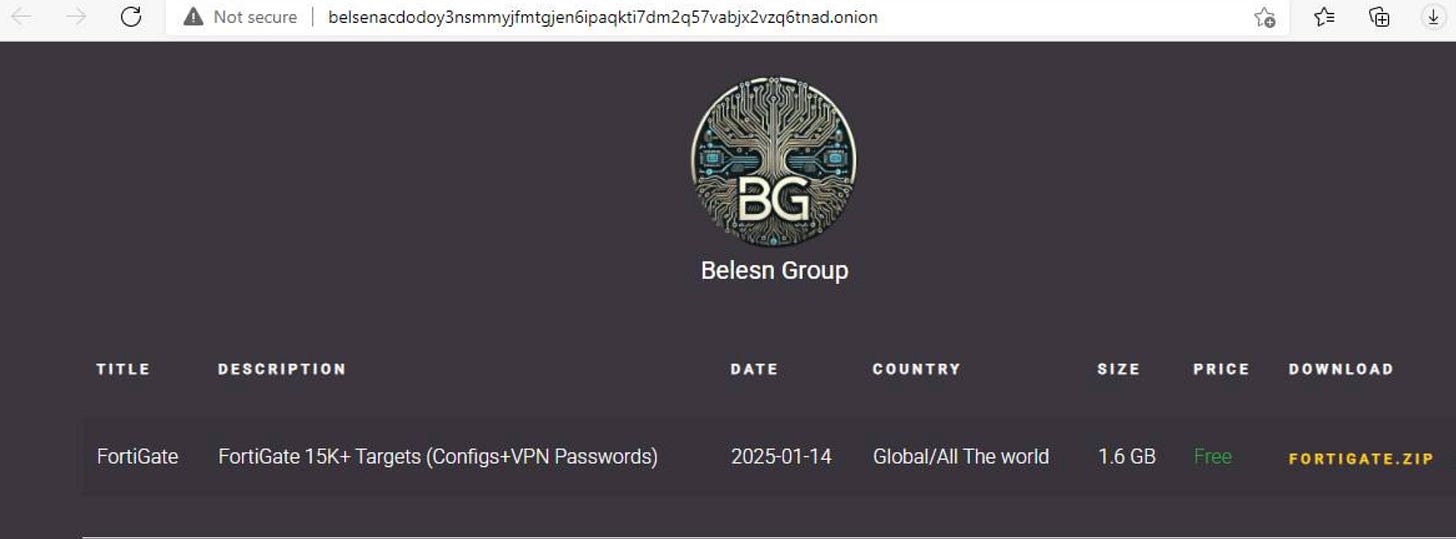

It’s the timing

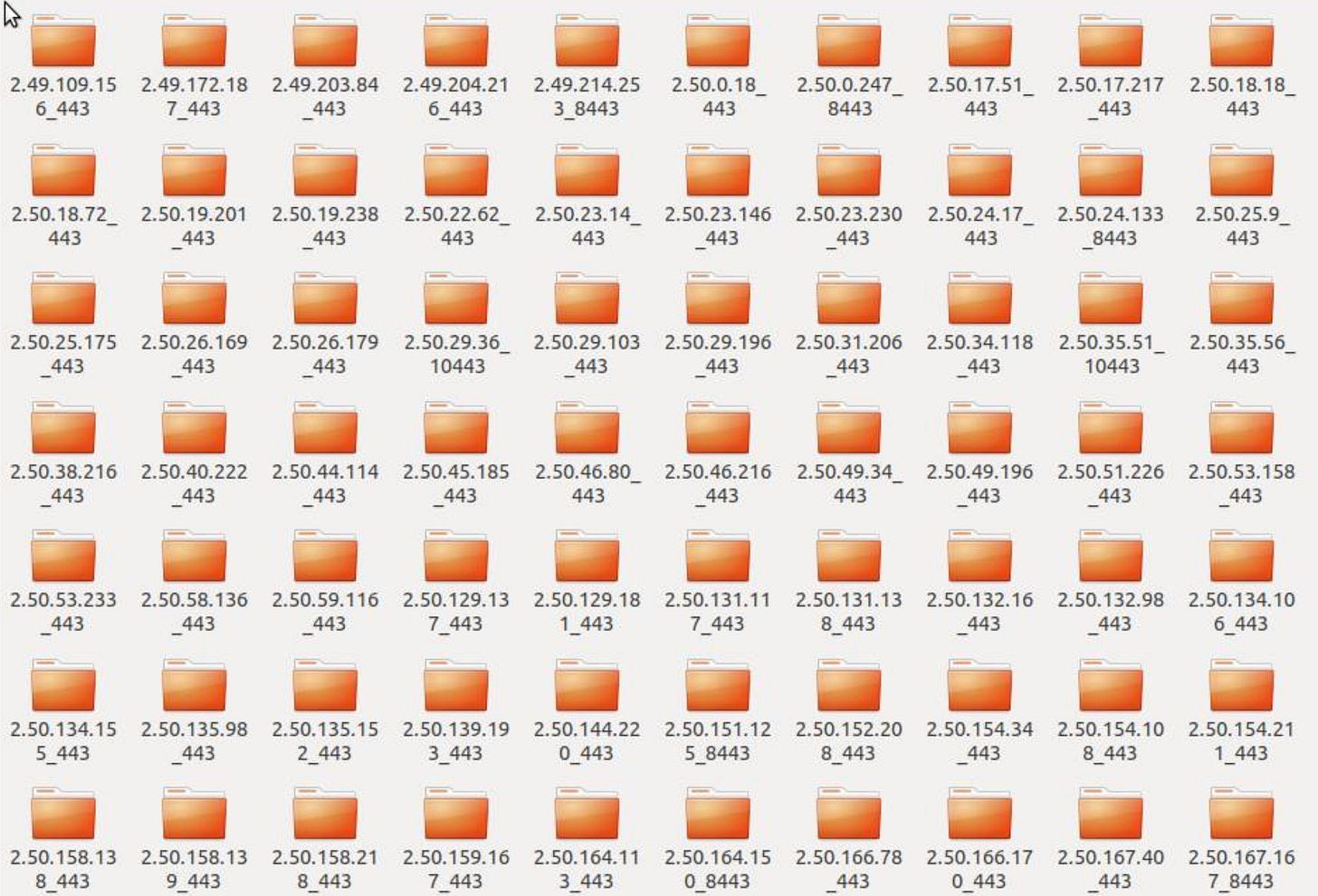

In the middle of all this, I came across a social media post from “Belsen_group” claiming to have credentials and configs. They published the data for “free” and organized it by country “to make it easier for you”. I checked their site and the data to get an overall sense of whether it relates to the current exploitation. I will not go into it much, as Kevin Beaumont beat me to it: according to Kevin, the data comes from 2022 exploitation of CVE-2022-40684, not the current one. It’s just the timing. You can find his article at https://doublepulsar.com/2022-zero-day-was-used-to-raid-fortigate-firewall-configs-somebody-just-released-them-a7a74e0b0c7f

Several of the passwords I observed are, one might say, weak. Most of them follow these patterns:

1. Simple, 4–8 characters

2. Variations of the username or of “VPN”
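If you are auditing your own firewall’s local accounts against these patterns, a rough check is easy to script. This sketch encodes only the two patterns observed above; treating “variation” as “the letters left after lowercasing and stripping digits and symbols contain the username or ‘vpn’” is an assumption, and it is no substitute for a real password policy.

```python
import re


def _core(text):
    """Lowercase and drop everything except letters: 'Admin2022!' -> 'admin'."""
    return re.sub(r"[^a-z]", "", text.lower())


def weak_reasons(username, password):
    """Return which of the observed weak patterns a credential pair matches."""
    reasons = []
    if 4 <= len(password) <= 8:
        reasons.append("short (4-8 chars)")
    core, user = _core(password), _core(username)
    if user and user in core:
        reasons.append("variation of username")
    if "vpn" in core:
        reasons.append("variation of 'vpn'")
    return reasons
```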

The IPs included in the exposure are provided by Amram Englander on their GitHub (https://raw.githubusercontent.com/arsolutioner/fortigate-belsen-leak/refs/heads/main/affected_ips.txt), so feel free to check them and update your configurations accordingly.
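A minimal sketch for checking your own public IPs against that list, using only the standard library. It assumes the file is plain text with one IP per line (adjust the parsing if the format differs) and skips anything that does not parse as an IP address.

```python
import ipaddress
import urllib.request

LEAK_LIST_URL = ("https://raw.githubusercontent.com/arsolutioner/fortigate-belsen-leak/"
                 "refs/heads/main/affected_ips.txt")


def fetch_leak_list(url=LEAK_LIST_URL, timeout=30):
    """Download the list as text."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")


def parse_ip_list(text):
    """Parse one-IP-per-line text into a set, ignoring blank and non-IP lines."""
    ips = set()
    for line in text.splitlines():
        try:
            ips.add(ipaddress.ip_address(line.strip()))
        except ValueError:
            continue
    return ips


def exposed(own_ips, leaked):
    """Return which of our own IPs appear in the leaked set, sorted."""
    return [str(ip) for ip in sorted({ipaddress.ip_address(i) for i in own_ips} & leaked)]
```

Typical usage would be `exposed(["<your public IPs>"], parse_ip_list(fetch_leak_list()))`.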

Why bother then

If nothing is related, why publish this? My only intention was to show how infra analysis can help threat hunters at times, and to share what I’ve learned:

1. Patience: there are a lot of dead ends, so stay focused.

2. Finding pivots: no pivot is a sure shot, so stay within bounds while pivoting. We should always understand why infrastructure is structured in certain ways and how it can be used.

3. Using multiple data sources: combining certificates, DNS records, and service fingerprints from multiple platforms is always a good idea.

For anyone starting out in infrastructure analysis, remember to document everything (especially the failures) so it helps you in the future, and stay excited.

References

1. https://arcticwolf.com/resources/blog/console-chaos-targets-fortinet-fortigate-firewalls/

2. https://www.facct.ru/blog/core-werewolf/

3. https://securelist.com/awaken-likho-apt-new-implant-campaign/114101/
