Recently, after discussions with a few folks, I realized there are some challenges in threat hunting, especially around threat hunt research. These folks understand that threat hunting is a proactive approach to uncovering threats, but they feel their approach is scattered and inefficient, leading to inconsistent results despite the time they invest. Every hunt felt like starting from scratch, and they were unable to build on previous hunts or scale their process effectively. I think one possible root cause is the lack of a structured approach to threat hunting research. Without that structure, threat hunting becomes a game of chance rather than a systematic process.

But threat hunting research can be an efficient, repeatable process that delivers consistent results. To achieve that, we need to understand and implement a structured methodology, especially for the research phase, which guides us through all the other phases of the threat hunting process.

Threat hunt research is all about figuring out how adversaries operate and how to uncover their presence, and it is the foundation for conducting effective hunts. It's a process of understanding adversary behaviors, mapping them to observable events, exploring hunt hypotheses, and understanding environmental factors using structured methodologies, frameworks, and approaches. Hunt research is what helps us (threat hunting teams) go from an ad-hoc activity to a repeatable, scientific process.

I will attempt to compare threat hunting research to DFIR (Digital Forensics and Incident Response), drawing from my experience wherever possible.

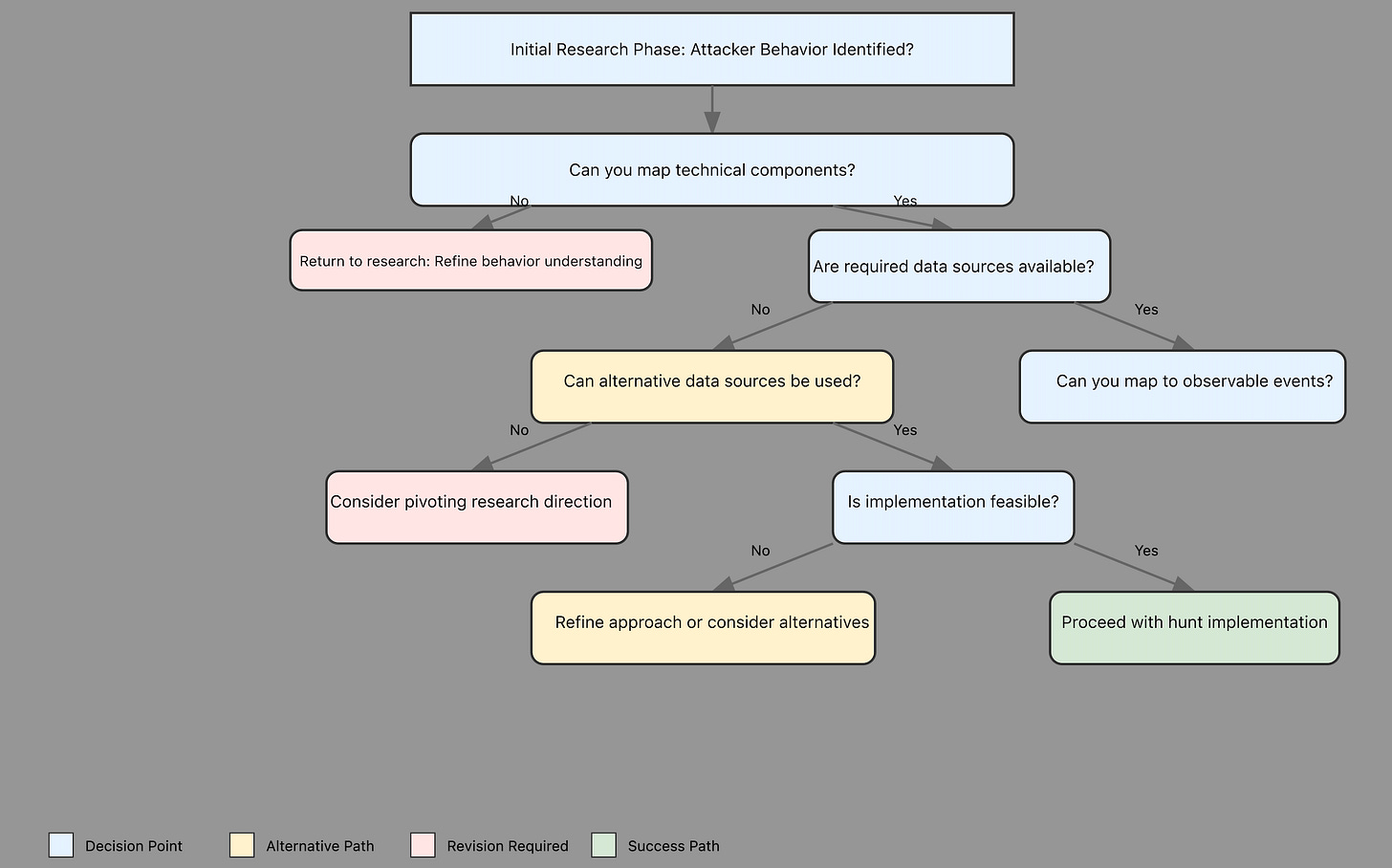

TL;DR: Here is a diagram that might help you with threat hunting research.

Threat hunting research is similar to Digital Forensics and Incident Response (DFIR). In DFIR, folks look into incidents after they happen; in threat hunting, we look for patterns before a potential breach, or for patterns that deviate from environmental norms. But the way we investigate is similar, and knowing these similarities can help us approach threat hunting research better.

1. Initial Research

Just like DFIR investigators plan their investigations before they start, hunt research starts by planning and thinking about what we might find (consider the A.P.E.X. framework to see how). In DFIR, investigators might ask, "What systems were probably compromised?" and "What clues would show that they were?"

Similarly, in threat hunting research we should ask:

What specific attacker behavior am I investigating?

What does the attack chain look like?

What technical components make up this behavior?

What does a successful execution of this behavior look like?

The planning phase is important for both because it helps us understand the overall picture. DFIR investigators create timelines of events and figure out the scope and pivots, while threat hunting research maps an adversary's past attack patterns and anticipates how that behavior might look in the future. This lets us formulate hypotheses and build a hunt pipeline that assists and enhances existing detections.

2. Data Source Analysis

DFIR investigators find and record important evidence when they investigate an incident. They determine which sources, artifacts, and means might contain evidence of the incident. Threat hunting research does something similar when examining data, but with a proactive twist. Teams sometimes spend too much time during the hunt itself figuring out which data sources might contain relevant information. Instead, research should include a systematic approach to mapping adversary behaviors or patterns to relevant, available data sources and fields before the hunt even begins. This helps us understand not just the data sources we need but also the categories within those data sources and their fields. We need to know:

Where the relevant data is in the environment

How the data is formatted and structured

How long it’s supposed to be kept and how it’s collected

How different data sources are related
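To make this mapping concrete, here is a minimal Python sketch of what it could look like. Everything in it is an assumption for illustration: the source names, field names, retention values, and the `coverage_gaps` helper are hypothetical and not tied to any specific SIEM or logging product.

```python
# Hypothetical inventory: data source -> fields collected and retention in days.
AVAILABLE_SOURCES = {
    "process_creation": {"fields": {"image", "command_line", "parent_image"}, "retention_days": 90},
    "dns_queries": {"fields": {"query", "client_ip"}, "retention_days": 14},
}

# Hypothetical research output: behavior -> fields needed from each data source.
BEHAVIOR_REQUIREMENTS = {
    "suspicious_script_execution": {
        "process_creation": {"image", "command_line", "parent_image"},
        "script_block_logging": {"script_text"},
    },
    "dns_tunneling": {"dns_queries": {"query", "client_ip", "response_size"}},
}


def coverage_gaps(behavior: str, min_retention_days: int = 30) -> list[str]:
    """List what is missing to hunt for a behavior with the data we have."""
    gaps = []
    for source, needed_fields in BEHAVIOR_REQUIREMENTS[behavior].items():
        available = AVAILABLE_SOURCES.get(source)
        if available is None:
            gaps.append(f"{source}: not collected")
            continue
        missing = sorted(needed_fields - available["fields"])
        if missing:
            gaps.append(f"{source}: missing fields {missing}")
        if available["retention_days"] < min_retention_days:
            gaps.append(f"{source}: retention {available['retention_days']}d < {min_retention_days}d")
    return gaps


for behavior in BEHAVIOR_REQUIREMENTS:
    print(behavior, coverage_gaps(behavior))
```

Running a check like this during research, rather than during the hunt, surfaces missing sources, missing fields, and short retention windows before any query is written.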

3. From Artifacts to Activities

While IR investigators piece together clues from various sources to figure out what happened during an incident, threat hunt research does the same thing in reverse. Based on our hypothesis, we try to predict what artifacts and patterns specific adversaries, techniques, or tactics would leave behind. The main difference is that DFIR looks back to figure out what happened, while we look forward to predict future attacks. However, the intelligence gathered by both helps us understand attack patterns and use them to detect future attacks.

The key difference between hunters who feel like they are searching at random and those who hunt in a structured way is hypothesis generation.

A good hypothesis in threat hunting research: (Check out the Hunting Opportunities section here)

Focuses on a specific attacker behavior or technique

Links to concrete, observable events in the environment

Accounts for variations in how the behavior might manifest

Considers the context of environment and normal operations

Includes clear criteria for validating or disproving the hypothesis
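One way to keep ourselves honest about these criteria is to write the hypothesis down as a structured record and check which parts are still empty. The sketch below is only an illustration: the `Hypothesis` class, its field names, and the example values are hypothetical and simply mirror the five points above.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    behavior: str              # specific attacker behavior or technique
    observables: list[str]     # concrete, observable events in the environment
    variations: list[str]      # other ways the behavior might manifest
    environment_context: str   # what normal operations look like here
    validation_criteria: str   # what would validate or disprove the hypothesis

    def missing_elements(self) -> list[str]:
        """Return the names of the criteria that are still empty."""
        return [name for name, value in vars(self).items() if not value]


# Illustrative draft with two criteria not yet researched.
draft = Hypothesis(
    behavior="Scheduled task created for persistence",
    observables=["task creation event", "schtasks.exe process creation"],
    variations=[],
    environment_context="IT automation creates tasks nightly from a known host",
    validation_criteria="",
)
print(draft.missing_elements())  # ['variations', 'validation_criteria']
```

A draft that still reports missing elements is probably not ready to be hunted yet.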

4. Environmental Analysis

DFIR teams need to know the environment they’re investigating, including the network architecture, security controls, and business context (though this might not apply to consulting or MSSP engagements). In effective threat hunting research, we recognize that no two environments are identical, and what works in one organization might not work in another due to differences in:

Available data sources and logging configurations

Infrastructure complexity and distribution

The security tools that are deployed

The typical patterns of business activity

The limitations and blind spots in logging and monitoring

This understanding helps DFIR teams spot unusual activity and helps threat hunting research distinguish normal activity from potentially malicious or suspicious activity. Knowing these environmental factors also helps us develop hunts that are both effective and efficient.
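As a small illustration of separating normal from unusual activity, the Python sketch below compares new events against a baseline of what has been seen before. It is a simplified assumption of how baselining might work: the parent and child process pairs are made-up sample data, and `unusual` is a hypothetical helper, not a feature of any tool.

```python
from collections import Counter

# Hypothetical baseline: (parent process, child process) pairs seen during normal operations.
baseline_events = [
    ("explorer.exe", "chrome.exe"),
    ("explorer.exe", "chrome.exe"),
    ("services.exe", "svchost.exe"),
    ("services.exe", "svchost.exe"),
    ("winword.exe", "splwow64.exe"),
]
baseline_counts = Counter(baseline_events)


def unusual(events, min_seen=1):
    """Return events whose pair was seen fewer than min_seen times in the baseline."""
    return [event for event in events if baseline_counts[event] < min_seen]


todays_events = [
    ("explorer.exe", "chrome.exe"),
    ("winword.exe", "powershell.exe"),
]
print(unusual(todays_events))  # [('winword.exe', 'powershell.exe')]
```

Because the baseline comes from our own environment, the same logic would flag different events in a different organization, which is exactly the point of this step.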

5. Documentation

DFIR investigations require extensive documentation, from initial findings through final conclusions. This documentation becomes a treasure trove for future investigations, helping teams spot patterns across multiple incidents. Threat hunting research is not a one-time effort either. It's an evolving process that builds upon itself, refining the methodology over time rather than focusing on specific incidents. Each hunt provides new insights, reveals gaps in visibility, and helps refine future hunting approaches. Maintaining detailed documentation of our research, including both successes and failures, is crucial for building a mature hunting program. Both types of documentation have some things in common:

Detailed technical findings and observations

Analysis of patterns and behaviors

Connections between different pieces of evidence

Recommendations for future investigation or hunting
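Documentation pays off when it can be queried across hunts. As a rough sketch, assuming each hunt is recorded in a simple structure like the hypothetical one below (the hunt IDs, outcomes, and gap descriptions are invented for illustration), a few lines of Python can show which visibility gaps keep coming up:

```python
from collections import Counter

# Hypothetical hunt records; in practice these might live in a wiki or ticketing system.
hunt_records = [
    {"hunt": "H-001", "outcome": "no findings", "visibility_gaps": ["no DNS logs on VPN clients"]},
    {"hunt": "H-002", "outcome": "incident raised", "visibility_gaps": []},
    {"hunt": "H-003", "outcome": "no findings",
     "visibility_gaps": ["no DNS logs on VPN clients", "command line not captured on servers"]},
]

# Count how often each gap was recorded and keep the ones seen in more than one hunt.
gap_counts = Counter(gap for record in hunt_records for gap in record["visibility_gaps"])
recurring = [gap for gap, count in gap_counts.most_common() if count > 1]
print(recurring)  # ['no DNS logs on VPN clients']
```

Note that hunts with no findings still contribute here, which is one reason to document failures as carefully as successes.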

Research -> Hunt

The reason we do all this research is to hunt in our environment. It is also a catch-22: do we figure out what to research first and then hunt, or do we figure out what to hunt and then kick off research? The transition from theoretical research to practical hunting is often time-consuming and resource-intensive. It also requires several things to fall into place to bridge the gap between knowledge and action:

Clear documentation of the hunting methodology, not just the observables

Specific queries or search parameters derived from steps 2, 3, 4, and 5

Success criteria and metrics

Plans for handling findings, from clear security incidents, to suspicious findings that are irrelevant to our hypothesis, to leads for new hunts
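The plan for handling findings can be decided before the hunt starts. The following Python sketch shows one possible routing scheme. The `Route` categories and the three triage flags are my own assumptions for illustration; a real program would define these to match its incident response process.

```python
from enum import Enum


class Route(Enum):
    INCIDENT = "hand off to incident response"
    INVESTIGATE = "keep investigating within this hunt"
    NEW_HUNT = "queue as a lead for a future hunt"
    CLOSE = "document and close"


def route_finding(confirmed_malicious: bool, suspicious: bool, matches_hypothesis: bool) -> Route:
    """Decide what happens to a finding; the three flags are hypothetical triage inputs."""
    if confirmed_malicious:
        return Route.INCIDENT      # clear security incident
    if suspicious and matches_hypothesis:
        return Route.INVESTIGATE   # relevant to the current hypothesis
    if suspicious:
        return Route.NEW_HUNT      # suspicious but unrelated to our hypothesis
    return Route.CLOSE             # benign; still worth documenting


finding = route_finding(confirmed_malicious=False, suspicious=True, matches_hypothesis=False)
print(finding.value)  # queue as a lead for a future hunt
```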

Validation

DFIR teams and threat hunting researchers both know that false conclusions can be expensive. Both validate their findings through multiple data sources and analysis techniques, which sometimes requires simulating the behavior in a test environment. Threat hunting research requires thorough validation of hypotheses and methodologies, including:

Testing assumptions, queries, and hunt methods against known-good data or "normal behavior"

Verifying findings through multiple sources, and confirming that the observable events actually indicate the behavior we are hunting for

Considering alternative explanations

Documenting limitations and caveats
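Here is a minimal Python sketch of what such validation could look like: the same hunt logic is run against known-good data and against events captured while simulating the behavior. The `matches_hunt_logic` function and all of the sample events are hypothetical and exist only to show the idea.

```python
def matches_hunt_logic(event: dict) -> bool:
    """Hypothetical hunt logic: an Office application spawning a script interpreter."""
    office = {"winword.exe", "excel.exe"}
    interpreters = {"powershell.exe", "wscript.exe", "cmd.exe"}
    return event["parent"] in office and event["child"] in interpreters


# Known-good events collected during normal operations (illustrative).
known_good = [
    {"parent": "explorer.exe", "child": "winword.exe"},
    {"parent": "excel.exe", "child": "cmd.exe"},       # a legitimate macro-driven report
]
# Events recorded while simulating the behavior in a test environment (illustrative).
simulated = [
    {"parent": "winword.exe", "child": "powershell.exe"},
    {"parent": "winword.exe", "child": "mshta.exe"},   # a variation our logic does not cover
]

false_positives = [e for e in known_good if matches_hunt_logic(e)]
missed = [e for e in simulated if not matches_hunt_logic(e)]
print(f"false positives: {len(false_positives)}/{len(known_good)}")
print(f"missed simulations: {len(missed)}/{len(simulated)}")
```

Both numbers matter. A false positive on known-good data points to an alternative explanation we need to account for, and a missed simulation is a limitation worth documenting.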

Adaptability

Just as DFIR teams must adapt their techniques and methodologies to new types of attacks, evolving tech, and complex environments, threat hunting research needs to keep improving. Both face similar challenges:

Changes in attacker techniques and tools

Evolution of enterprise technology and architecture

Changes in security controls and monitoring

Building flexibility into our research methodology allows us to:

Evaluate new sources of evidence and data

Scale across growing environments

Integrate new tools and technologies, or refine existing ones according to our requirements

Conclusion

Hopefully, this parallel between DFIR and threat hunting provides valuable insight into threat hunting research. Both require systematic approaches, detailed technical understanding, and the ability to adapt to changing circumstances. By applying a methodology similar to DFIR's to threat hunting research, organizations can make their hunting programs even better. There is always room for creativity and innovation within this structured approach, as threat hunting is both an art and a science.
